When I first explored LLMs and generative AI, I assumed “bigger model, better results.” My early projects proved me wrong—what mattered was matching the approach to the target use case.

I’ve watched RAG systems turn chatbots stuck on outdated, static data into context-aware assistants that pull current information from live enterprise data. I’ve seen fine-tuning transform a generic pre-trained model into a customer service chatbot fluent in niche terminology. And yes, I’ve used prompt engineering to go from nonsense to accurate, tailored outputs in a single iteration.

Executive Summary (TL;DR)

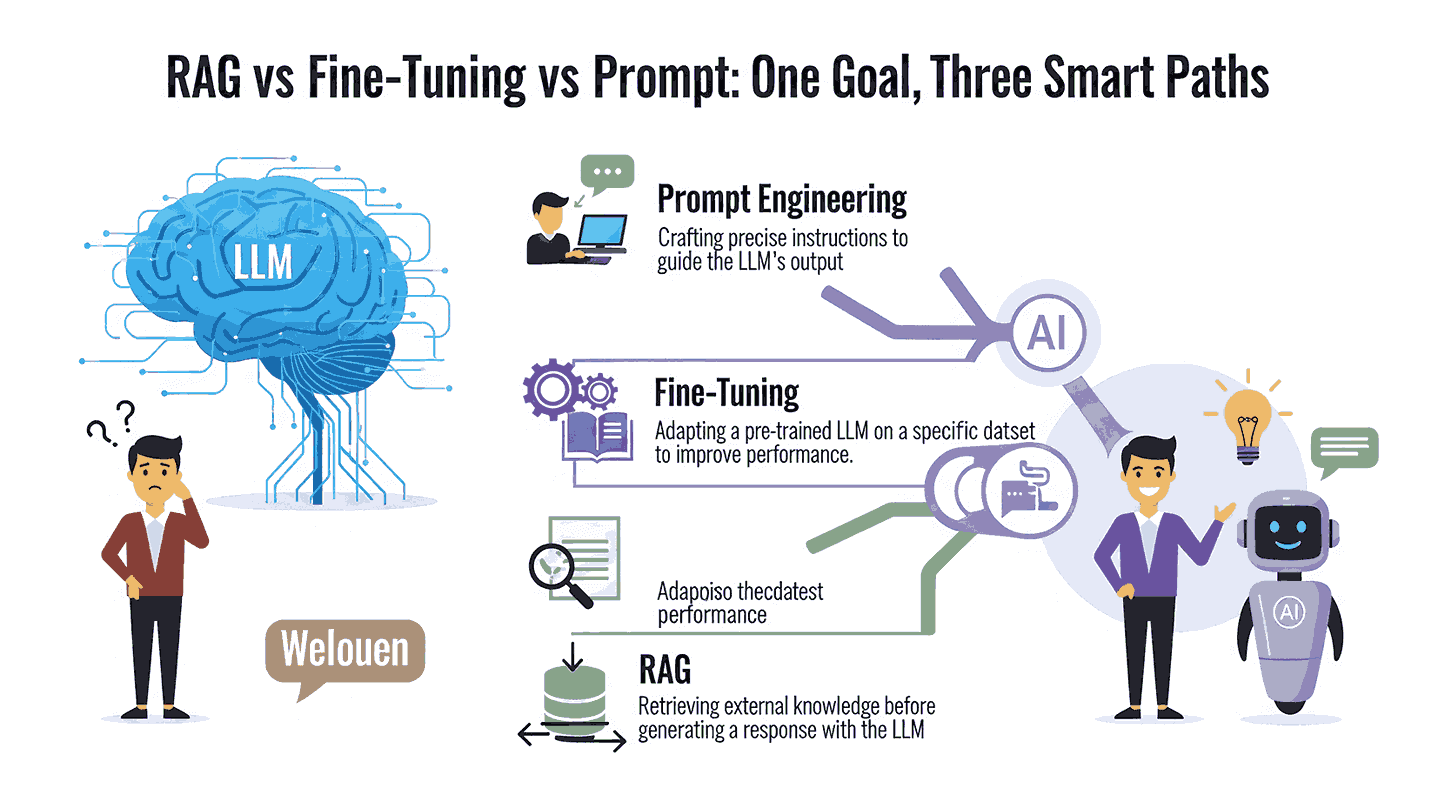

If you want accurate, contextually rich, and cost-effective AI that delivers relevant responses, you have three main approaches:

- Retrieval-Augmented Generation (RAG) → Retrieves information from external knowledge bases or internal data and adds it to a pre-trained model's context, so answers reflect current data.

- Fine-Tuning → Adjusts model weights on proprietary data or a specialized dataset for high domain-specific accuracy and downstream performance.

- Prompt Engineering → Designs input prompts with clear instructions to steer model behavior without retraining.

Hybrid strategies often yield optimal outcomes, especially in enterprise AI applications.

Why This Comparison Matters

Choosing the wrong method wastes compute, budget, and time:

- Fine-tuning on huge unlabeled datasets when your data requirements are minimal is wasteful.

- Relying on prompting alone when the task demands specialized domain knowledge risks inaccurate answers.

- RAG without a strong retrieval system leads to irrelevant content.

Your application, whether it’s sentiment analysis, legal query resolution, or an assistant grounded in real-world knowledge, must guide your choice.

What is Retrieval-Augmented Generation (RAG)? How It Works and When to Use It

RAG architecture combines retrieval systems and text generation. Here’s the process:

- The input query triggers the information retrieval mechanism.

- Relevant documents or passages are found using semantic search over embeddings (typically stored in a vector database) or keyword-based methods such as TF-IDF.

- This retrieved data augments the original context.

- The language model generates coherent outputs with additional context for accurate, grounded answers.
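
Here is a minimal sketch of the first three steps above, assuming TF-IDF with cosine similarity as the retrieval method. The sample documents, the function names (`retrieve`, `build_augmented_prompt`), and the prompt wording are all hypothetical and for illustration only. The final generation step is left out because it depends on whichever model you call.

```python
import math
from collections import Counter

_PUNCT = ".,?!:;()\"'"

# Hypothetical knowledge base, for illustration only.
SAMPLE_DOCS = [
    "Refunds are processed within 5 business days.",
    "Our office is closed on public holidays.",
    "Shipping takes 3 days.",
]

def tokenize(text):
    """Lowercase, split on whitespace, and strip surrounding punctuation."""
    tokens = (w.strip(_PUNCT).lower() for w in text.split())
    return [t for t in tokens if t]

def tfidf_vectors(docs):
    """Return ({term: weight} per document, idf table) using smoothed IDF."""
    tokenized = [tokenize(d) for d in docs]
    n = len(docs)
    doc_freq = Counter(term for toks in tokenized for term in set(toks))
    idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in doc_freq.items()}
    vectors = []
    for toks in tokenized:
        tf = Counter(toks)
        vectors.append({t: count * idf[t] for t, count in tf.items()})
    return vectors, idf

def cosine(a, b):
    """Cosine similarity between two sparse {term: weight} vectors."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query, docs, k=2):
    """Steps 1-2: score every document against the query, return the top k."""
    vectors, idf = tfidf_vectors(docs)
    q_tf = Counter(tokenize(query))
    # Query terms that never appear in the corpus carry no weight.
    q_vec = {t: c * idf[t] for t, c in q_tf.items() if t in idf}
    ranked = sorted(range(len(docs)), key=lambda i: -cosine(q_vec, vectors[i]))
    return [docs[i] for i in ranked[:k]]

def build_augmented_prompt(query, passages):
    """Step 3: place the retrieved passages in front of the original query."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )
```

The string returned by `build_augmented_prompt` is what you would send to the language model in step four. In a real system you would likely swap the TF-IDF scoring for embeddings and a vector database, but the retrieve-then-augment shape stays the same.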

Strengths:

- Pulls external knowledge and up-to-date information from internal and external sources.

- Ideal for open-ended question/answer sessions, healthcare provider tools (pulling medications and treatments), and compliance-ready enterprise systems.

- Flexible and scalable for real-time data retrieval.

Limitations:

- Data dependency—garbage in, garbage out.

- Requires integration with well-curated knowledge bases and private knowledge sources.

- Maintenance of retrieval pipelines and data architecture is ongoing.

What is Fine-Tuning? Key Benefits, Limitations, and Use Cases

Fine-tuning changes a base model to improve domain-specificity. Methods include:

- Supervised Fine-Tuning (SFT) on labeled data for specific tasks.

- Parameter-Efficient Fine-Tuning (PEFT) to cut computational costs.

- Continued pretraining on large domain-specific corpora for specialized areas.
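
To show why PEFT cuts costs, here is a small arithmetic sketch. It assumes LoRA, one common PEFT method that this article does not otherwise cover: instead of updating a full `d x k` weight matrix, LoRA freezes it and trains two low-rank factors of shapes `d x r` and `r x k`. The dimensions used in the comments are hypothetical.

```python
def full_finetune_params(d: int, k: int) -> int:
    """Trainable parameters when the whole d x k weight matrix is updated."""
    return d * k

def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for LoRA factors B (d x r) and A (r x k)."""
    return r * (d + k)

def trainable_fraction(d: int, k: int, r: int) -> float:
    """Share of the full matrix's parameter count that LoRA trains."""
    return lora_params(d, k, r) / full_finetune_params(d, k)

# Hypothetical layer: a 4096 x 4096 projection with rank r = 8.
# full_finetune_params(4096, 4096) -> 16_777_216
# lora_params(4096, 4096, 8)       -> 65_536
# trainable_fraction(4096, 4096, 8) -> 0.00390625 (1/256 of the matrix)
```

This covers a single matrix only. Actual savings depend on which layers get adapters and on the chosen rank.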

Benefits:

- Improved accuracy on specific needs like classification, code generation, or sentiment analysis.

- Leverages proprietary data for competitive advantage.

- Creates fully fine-tuned models for tailored, high-quality outputs.

Limitations:

- Expensive and time-consuming, with high compute requirements.

- Risks catastrophic forgetting of general knowledge.

- Requires data science expertise and advanced technical skills.

What is Prompt Engineering? Best Practices and Real-World Examples

Prompt Engineering is the art and practice of crafting clear instructions to steer model behavior toward desired outputs—without touching model weights.

Best practices:

- Use explicit instructions and prompt templates for consistency.

- Apply few-shot examples, chain-of-thought prompting, and in-context learning for complex queries.

- Test and tweak for improved accuracy and relevance.
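
As a rough illustration of the first two practices, here is a sketch of a reusable prompt template with few-shot examples for a sentiment task. The instruction wording, the example reviews, and the names `PROMPT_TEMPLATE` and `build_prompt` are my own assumptions, not a prescribed format.

```python
from string import Template

# Explicit instruction plus slots for the few-shot examples and the new input.
PROMPT_TEMPLATE = Template(
    "Classify the sentiment of the review as positive, negative, or neutral. "
    "Reply with one word.\n\n"
    "$examples\n"
    "Review: $review\n"
    "Sentiment:"
)

# Hypothetical few-shot examples, for illustration only.
FEW_SHOT_EXAMPLES = [
    ("The battery lasts all day.", "positive"),
    ("It broke after a week.", "negative"),
]

def format_examples(examples):
    """Render each (review, label) pair in the same layout as the final query."""
    return "\n".join(
        f"Review: {text}\nSentiment: {label}\n" for text, label in examples
    )

def build_prompt(review, examples=FEW_SHOT_EXAMPLES):
    """Fill the template so every request uses identical structure."""
    return PROMPT_TEMPLATE.substitute(
        examples=format_examples(examples), review=review
    )
```

Because the examples and the final query share one layout, the model sees a consistent pattern to complete. Testing and tweaking then means editing the template or the examples, not the calling code.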

Examples:

- Retail chatbots whose prompts instruct the model to answer only from supplied product review excerpts.

- Customer support scripts for instant resolution in real-time enterprise data scenarios.

- Creative writing prompts that produce hyper-personalized responses for specific users.

The Core Differences: RAG vs Fine-Tuning vs Prompt Engineering

- RAG → Augments with retrieved knowledge for contextually enriched answers.

- Fine-tuning → Alters model weights to specialize in niche topics.

- Prompt engineering → Influences behavior via phrasing, formatting, and instructions.

Comparison Table: RAG vs Fine-Tuning vs Prompt Engineering

| Feature | RAG | Fine-Tuning | Prompt Engineering |
| --- | --- | --- | --- |
| Data Freshness | Real-time | Static | Depends on base model |
| Cost | Moderate | High, expensive | Low, cost-effective |
| Flexibility | High | Medium | Very high |
| Accuracy in Domain | Medium-High | Very high | Medium |
| Maintenance | Continuous | Periodic retraining | Low |

Pros and Cons for Each Approach

RAG:

- Pros: Current information, contextual relevance.

- Cons: Data dependency, retrieval precision challenges.

Fine-Tuning:

- Pros: Specialized dataset mastery, high accuracy.

- Cons: High computational resources, time-consuming.

Prompt Engineering:

- Pros: Quick answers, low cost, high adaptability.

- Cons: Inconsistency, limited control over the model’s knowledge.

Hybrid Strategies: Combine RAG + Prompting + Fine-Tuning

In practice, balanced approaches win.

Example: RAG fetches current, relevant documents, prompt engineering refines message style, and fine-tuning ensures domain-specific accuracy.
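
The example above can be sketched as a three-stage pipeline. Everything here is a hypothetical stand-in: `stub_retrieve` represents your retrieval system, `stub_build_prompt` represents your prompt template, and `stub_generate` represents a call to a fine-tuned model. Only the order of the stages is the point.

```python
from typing import Callable, List

def hybrid_answer(
    query: str,
    retrieve: Callable[[str], List[str]],
    build_prompt: Callable[[str, List[str]], str],
    generate: Callable[[str], str],
) -> str:
    """Run retrieval, then prompt construction, then generation."""
    passages = retrieve(query)              # RAG: fetch current documents
    prompt = build_prompt(query, passages)  # prompt engineering: style and rules
    return generate(prompt)                 # fine-tuned model: domain accuracy

# Stand-in components so the sketch runs without external services.
def stub_retrieve(query: str) -> List[str]:
    return ["Policy v2: refunds take 5 business days."]

def stub_build_prompt(query: str, passages: List[str]) -> str:
    context = "\n".join(passages)
    return f"Tone: concise and friendly.\nContext:\n{context}\nQuestion: {query}"

def stub_generate(prompt: str) -> str:
    # A real implementation would call the fine-tuned model here.
    return f"[model output for a {len(prompt)}-character prompt]"

answer = hybrid_answer(
    "How long do refunds take?", stub_retrieve, stub_build_prompt, stub_generate
)
```

Passing the stages in as functions lets you replace any one of them, for example moving from a base model to a fine-tuned one, without touching the other two.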

When to Choose Which Method

- Choose RAG if you need real-time, factual accuracy from external data sources.

- Choose Fine-Tuning when downstream performance in specific domains is critical.

- Choose Prompt Engineering for speed, cost-effectiveness, and flexibility.
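
These rules can be written down as a small helper. This is only a sketch: the flag names are mine, and falling back to prompt engineering when nothing else applies is my assumption, on the grounds that it is the cheapest place to start.

```python
from typing import List

def recommend_approaches(
    needs_current_data: bool,
    domain_accuracy_critical: bool,
    prioritize_speed_and_cost: bool,
) -> List[str]:
    """Map project needs to one or more approaches; combinations are allowed."""
    picks: List[str] = []
    if needs_current_data:
        picks.append("RAG")
    if domain_accuracy_critical:
        picks.append("fine-tuning")
    # Assumed default: with no other requirement, start with prompting.
    if prioritize_speed_and_cost or not picks:
        picks.append("prompt engineering")
    return picks
```

For instance, `recommend_approaches(True, True, False)` returns `["RAG", "fine-tuning"]`, which matches the hybrid setups described earlier.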

Practical Decision Framework

- Define the project’s specific requirements and desired outcomes.

- Audit available resources and your data.

- Prioritize optimization techniques that match your goals.

Conclusion

The smartest AI systems don’t rely on a single method—they combine methods to match context, accuracy, and speed needs. Whether you retrain, augment, or prompt, the key is alignment between the approach and the application.

FAQs

Which is better, RAG or fine-tuning?

It depends on the use case. RAG wins when access to external knowledge and up-to-date information is crucial. Fine-tuning wins for high accuracy, strict structure, and task-specific training data.

Is RAG a form of prompt engineering?

No. RAG stands for Retrieval-Augmented Generation: it pairs a retrieval system with text generation. Prompt engineering guides the model with prompts and explicit instructions.

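What is the difference between fine-tuning and prompt tuning?

Fine-tuning changes model weights through training on a dataset. Prompt tuning, strictly speaking, trains a small set of soft prompt embeddings while the base model's weights stay frozen. Plain prompting (prompt engineering) trains nothing: it uses input prompts to steer the model’s generated responses without altering weights.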

Is prompt engineering considered fine-tuning?

No. They are different methods, though you can use them in tandem for better results.

Is an LLM the same as RAG?

No. An LLM is a language model. RAG is a hybrid approach that retrieves knowledge and combines it with the original input before the LLM generates a response.

Is RAG cheaper than fine-tuning?

Often, yes, in terms of ongoing cost. RAG setup has moderate complexity, but avoiding frequent retraining usually makes it more cost-effective.


I’m a tech and AI entrepreneur who loves building smart tools and products. I start new businesses that use technology like AI to solve real-world problems. I enjoy turning problems into solutions with the help of AI and SEO that help people and make life easier.